Model building code – Vertex AI Custom Model Hyperparameter and Deployment

Once the Dockerfile is created, we need to work on the python code for the model building. The task is to build a classification model. Python code will be copied into the container and submitted for training job.

Let us go back to the terminal of the workbench and follow the given steps to create the Python code for model building.

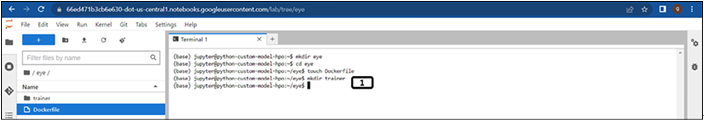

Step 1: Create a trainer folder

- Type the commands in the Terminal as shown in the Figure 5.6:

Figure 5.6: Trainer folder creation in Terminal

2. Then type the following command in the Terminal to create directory:

mkdir trainer

The command will create a new folder called Trainer. Open this folder.

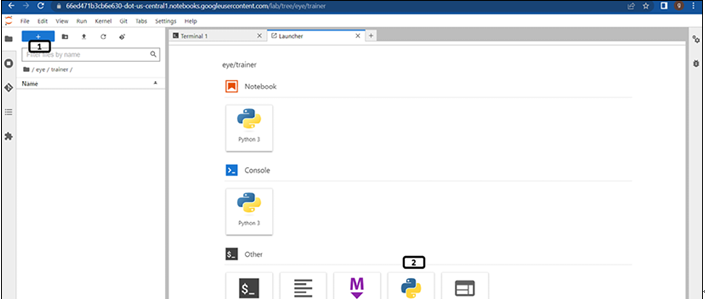

Step 2: Create a Python file

Follow the given steps and refer to Figure 5.7 to create a Python file (create the python file inside the trainer folder):

- Click on New launcher, which is the + button.

- Double click on the Python file.

Figure 5.7: Python file creation on workbench for model building

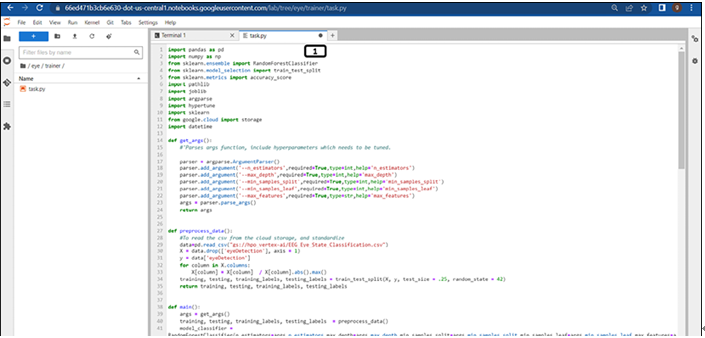

Step 3: Python code

A Python file will be created inside the trainer folder. Write the following given Python code in the py file:

import pandas as pd

import numpy as np

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import pathlib

import pickle

import argparse

import hypertune

import sklearn

from google.cloud import storage

import datetime

def get_args():

Parses args function, include hyperparameters which needs to be tuned.

parser = argparse.ArgumentParser()

parser.add_argument(‘–n_estimators’,required=True,type=int,help=’n_estimators’)

parser.add_argument(‘–max_depth’,required=True,type=int,help=’max_depth’)

parser.add_argument(‘–min_samples_split’,required=True,type=int,help=’min_samples_split’)

parser.add_argument(‘–min_samples_leaf’,required=True,type=int,help=’min_samples_leaf’)

parser.add_argument(‘–max_features’,required=True,type=str,help=’max_features’)

args = parser.parse_args()

return args

def preprocess_data():

To read the csv from the cloud storage, and standardize

data=pd.read_csv(“gs://hpo_vertex-ai/EEG_Eye_State_Classification.csv”)

X = data.drop([‘eyeDetection’], axis = 1)

y = data[‘eyeDetection’]

for column in X.columns:

X[column] = X[column] / X[column].abs().max()

training, testing, training_labels, testing_labels = train_test_split(X, y, test_size = .25, random_state = 42)

return training, testing, training_labels, testing_labelsdef main():

args = get_args()

training, testing, training_labels, testing_labels = preprocess_data()

model_classifier = RandomForestClassifier(n_estimators=args.n_estimators,max_depth=args.max_depth,min_samples_split=args.min_samples_split,min_samples_leaf=args.min_samples_leaf,max_features=args.max_features)

model_classifier.fit(training,training_labels)

y_pred = model_classifier.predict(testing)

acc = accuracy_score(testing_labels, y_pred)

hpt = hypertune.HyperTune()

hpt.report_hyperparameter_tuning_metric(

hyperparameter_metric_tag=’accuracy’,

metric_value=acc)

artifact_filename = “model.pkl”

pickle.dump(model_classifier, open(artifact_filename, “wb”))

BUCKET = ‘hpo_vertex-ai’

gcs = storage.Client(project=”Vertex-ai”)

buck=gcs.bucket(BUCKET)

blob = buck.blob(artifact_filename)

blob.upload_from_filename(artifact_filename)

if name == “main”:

main()

Refer to the following Figure 5.8:

Figure 5.8: Python code for model building

Follow the corresponding points:

- Type the Python code.

- Right click and rename the Python file name to task.py.

- Save the python file and close the file.

The Python code consists of codes, to import required packages. The function get args contains the hyperparameters that need to be tuned and hyperparameters will be passed as arguments. In our exercise, we are considering five hyperparameters for the tuning they are:

• n_estimator

• max_depth

• min_samples_split

• min_samples_leaf

• max_features

max_features takes a string value as input and all the other hyperparameters take integer value.

Function preprocess data, reads the file, applies basic transformations and returns the split data. The main function builds the model and initiates the hyperparameter tuning of the model and saves the model to cloud storage.

You may also like

Archives

- September 2024

- August 2024

- July 2024

- June 2024

- May 2024

- April 2024

- March 2024

- February 2024

- January 2024

- December 2023

- November 2023

- September 2023

- August 2023

- June 2023

- May 2023

- April 2023

- February 2023

- January 2023

- November 2022

- October 2022

- September 2022

- August 2022

- June 2022

- April 2022

- March 2022

- February 2022

- January 2022

- December 2021

- November 2021

- October 2021

Calendar

| M | T | W | T | F | S | S |

|---|---|---|---|---|---|---|

| 1 | 2 | |||||

| 3 | 4 | 5 | 6 | 7 | 8 | 9 |

| 10 | 11 | 12 | 13 | 14 | 15 | 16 |

| 17 | 18 | 19 | 20 | 21 | 22 | 23 |

| 24 | 25 | 26 | 27 | 28 | ||

Leave a Reply